AI Coding Agents Beyond the Hype

AI coding agents are the newest “wonder tool” in software development, but unlike earlier hype cycles this one is starting to show measurable results, with a few caveats. Software engineers are beginning to work with AI developer tools that can read context, edit files and even trigger deployment checks autonomously.

Agentic AI integration is reshaping workflows, not replacing engineers. Coding agents still perform best in routine areas such as refactoring, test generation and documentation, and they also handle prototype scaffolding or quick data-model fixes with minimal supervision. They are fast and tireless, but they still lack the judgment and reasoning of experienced human developers.

What AI Coding Agents Can Actually Do Today

A year ago, AI agents in software development still felt like interesting novelties. Now, they’re becoming reliable workflow contributors, powered by better models and tighter IDE integration. Instead of prompting a chatbot in the browser, engineers converse with embedded agents that maintain state across multiple files and apply changes directly inside their editors.

These AI dev tools perform well in a small set of consistent contexts: AI code generation from plain language, refactoring and debugging existing logic, writing or updating documentation, and generating tests or configuration files. Tools like Cursor restructure codebases, Claude Code and Windsurf handle multi-module edits, and Zed or Kiro focus on speed and collaboration.

Copilot remains a daily companion for incremental work, while teams with stricter security needs prefer solutions on private infrastructure, such as VS Code with Continue.dev and a locally hosted Ollama AI server, a solution we leveraged for one project at DO OK.
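As a rough illustration of the private-infrastructure setup described above, the sketch below sends a prompt to a locally hosted Ollama server over its HTTP API and refuses to talk to any non-local host. The model name `codellama`, the helper names and the local-only allow-list are assumptions made for illustration, not details of any particular project; `http://localhost:11434/api/generate` is Ollama's default generate endpoint.

```python
import json
import urllib.request
from urllib.parse import urlparse

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"
LOCAL_HOSTS = {"localhost", "127.0.0.1", "::1"}


def build_request(prompt: str, model: str = "codellama",
                  url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming generate request, rejecting non-local hosts."""
    host = urlparse(url).hostname
    if host not in LOCAL_HOSTS:
        raise ValueError(f"refusing to send code to non-local host: {host}")
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        url,
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "codellama") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        return json.loads(resp.read())["response"]
```

Calling `ask_local_model("Summarise this function: ...")` only works with a running Ollama instance that has the chosen model pulled. The host check is a deliberately blunt guard; a real deployment would more likely enforce this at the network level.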

The most tangible efficiency gains appear in structured, low-risk tasks like regenerating test cases after API changes, rewriting headers, cleaning syntax or auto-documenting endpoints. Teams report faster issue resolution and smoother onboarding as agents surface context directly from the codebase.

Wherever software work is repetitive, rule-bound or documentation-heavy, autonomous software agents deliver visible acceleration. However, reliability drops once projects start to demand broader architectural insight.

How Developers Are Using AI Coding Agents in Practice

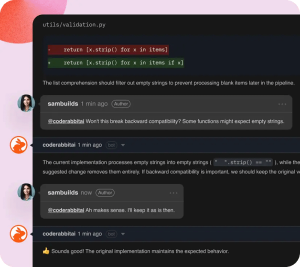

Early experimentation has evolved into systematic workflows embedding agents throughout the development lifecycle. Common integrations include IDE plugins, CLI tools, CI/CD extensions and DevOps platforms like Postman or GitHub. In mature setups, AI DevOps automation tools now sit alongside linters and test runners as standard components, quietly automating routine code maintenance and QA steps.

At DO OK, our teams rely on AI coding agents inside Newcode, where VS Code runs with the GitHub Copilot extension and Copilot Pro+ as a core part of our workflow. Claude Code 4.5 is the current leader for multi-file reasoning and debugging, while GitHub’s own coding agent handles early pull-request preparation, coding plans and initial scaffolding. CodeRabbit, an AI code review assistant, rounds out the stack, assisting with automated reviews, improvement suggestions and concise pull-request summaries.

Teams across the wider development ecosystem use agents in similar ways, delegating routine work while human developers keep their focus on architecture and logic. Some use agents to scaffold prototypes or migrate small modules, and data-model validation during feature sprints is emerging as another common use case.

Reported results suggest that targeted delegation can halve testing time or cut documentation lag from days to hours. At Index.dev, teams using Postman with embedded agents reduced manual testing by 60%, while another group using Cursor and Claude Code trimmed their onboarding time from a week to less than two days.

Gains depend on supervision and clear scope. Agents thrive on defined tasks but falter with open-ended goals.

Challenges That Still Hold Back Agentic Development

AI coding agents have made big advances in the last 12 months alone, but they remain limited by how modern models are trained, deployed and governed.

Fundamental limits of current training

Most large models are built on datasets that grow faster than the models improve, and as more AI-generated content enters those datasets, self-training on ‘AI slop’ compounds the noise. Scaling doesn’t guarantee reliability; beyond a certain point, errors start to reinforce themselves.

We agree with the industry consensus that better models will come from structured, human-curated data and improved reasoning transparency.

Reliability and hallucination

The guiding insight for working with language models is that they don’t reason; they correlate, and they guess when they are uncertain. This statistical overconfidence explains why even advanced systems produce polished but error-ridden code. Current evaluation methods worsen the issue by rewarding plausible formatting over factual accuracy, encouraging systems that “sound right” rather than systems that are right.

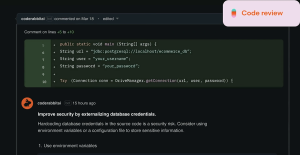

Until benchmarks and objectives change, hallucination will remain endemic, so agents must continue to operate behind validation layers and human review. AI debugging tools that can verify and patch errors autonomously may ease this over time, but the need for robust human oversight persists.
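A validation layer of the kind described above can start very small. The sketch below, written under the assumption that the agent's output is Python source, rejects code that does not parse and flags a few risky calls for human review. The function name `review_agent_output` and the `RISKY_CALLS` list are hypothetical choices for illustration, not a complete safety check.

```python
import ast

# Assumption: calls a team might want a human to look at before merging.
RISKY_CALLS = {"eval", "exec", "system"}


def review_agent_output(source: str) -> dict:
    """Return a verdict for agent-generated Python source.

    'rejected'     -> the code does not even parse
    'needs_review' -> it parses but uses calls on the risky list
    'passed'       -> no automatic objection (tests and review still apply)
    """
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return {"status": "rejected", "reasons": [f"syntax error: {exc.msg}"]}

    reasons = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            func = node.func
            # Handle both plain names (eval) and attributes (os.system).
            name = getattr(func, "id", None) or getattr(func, "attr", None)
            if name in RISKY_CALLS:
                reasons.append(f"line {node.lineno}: call to {name}()")
    status = "needs_review" if reasons else "passed"
    return {"status": status, "reasons": reasons}
```

A gate like this only catches mechanical problems. It is meant to sit in front of the test suite and the human reviewer, not to replace either.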

Security and intellectual property

Research from Anthropic, in collaboration with the UK AI Security Institute and the Alan Turing Institute, shows that as few as 250 poisoned documents can create a persistent backdoor regardless of model size. Sensitive code should never reach public cloud inference, and local deployments keep operations controlled.

Data and codebase privacy are non-negotiable; even well-intentioned API calls can leak code fragments to third-party services. Some organisations mitigate this through air-gapped open-source setups; others sign enterprise privacy agreements, such as the OpenAI Enterprise Privacy program, which guarantees (in theory) that user data isn’t retained or used for training.

Even with strict privacy and security measures, explicit compliance reviews and periodic audits remain hard requirements for any team using AI to improve software engineering productivity.

Infrastructure and skills

Legacy pipelines assume human commits, and testing frameworks break when an agent edits too many files. Until CI/CD adapts, agents will remain partial participants. Developers need skills in prompt design, agent orchestration and AI governance alongside traditional engineering, which is as much a cultural evolution as a technical one. Building AI-ready data pipelines and well-architected infrastructure provides a solid foundation for adoption.

The most effective teams embed AI fluency into daily practice instead of isolating it within research or DevOps units.

How Developers Can Experiment with AI Coding Agents Safely

Safe experimentation requires structure and measurable outcomes. Here are some proven strategies that teams can leverage for working with AI dev tools:

1. Start with low-risk pilot cases

Use non-critical processes where automation speeds up work without risking production: generating test cases, writing documentation, refactoring helpers or automating CI checks. At DO OK we use AI in product discovery, where small structured experiments using automated tools help us define the right business and technical problems before scaling solutions.

2. Apply strong guardrails from the start

Define roles, permissions and audit trails before granting write access. Use version-control hooks to track AI-assisted commits and enforce validation layers. Local or air-gapped setups keep proprietary data inside company networks, which can be complemented with differential privacy tools or sandboxed inference APIs.
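One way to picture the version-control hooks mentioned above is a Git `commit-msg` hook that refuses commits which do not declare whether an agent was involved. This is a minimal sketch: the `AI-Assisted:` trailer is a hypothetical team convention, not a Git standard, and the function names are illustrative. Git does pass the path of the commit message file as the hook's first argument and aborts the commit on a non-zero exit status.

```python
#!/usr/bin/env python3
"""Sketch of a Git commit-msg hook that requires an AI-usage trailer."""
import re
import sys

# Assumption: the team agrees on a trailer such as "AI-Assisted: yes" or "AI-Assisted: no".
TRAILER = re.compile(r"^AI-Assisted:\s*(yes|no)\s*$", re.IGNORECASE | re.MULTILINE)


def has_ai_trailer(message: str) -> bool:
    """True if the commit message declares whether an agent contributed."""
    # Ignore comment lines that Git adds to the message template.
    body = "\n".join(line for line in message.splitlines() if not line.startswith("#"))
    return TRAILER.search(body) is not None


def main(argv: list[str]) -> int:
    # Git passes the path of the commit message file as the first argument.
    with open(argv[1], encoding="utf-8") as handle:
        message = handle.read()
    if has_ai_trailer(message):
        return 0
    print("commit rejected: add an 'AI-Assisted: yes|no' trailer", file=sys.stderr)
    return 1  # a non-zero exit status makes Git abort the commit


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv))
```

Saved as an executable `.git/hooks/commit-msg`, the script runs on every local commit. Because local hooks can be bypassed, a team relying on this for audit trails would probably repeat the same check on the server or in CI.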

AI tools for embedded and IoT development require even stricter controls, because agent-written code can execute at the hardware level.

3. Measure ROI, not novelty

Track outcomes through time-to-commit, issue-resolution rate or documentation lag to verify genuine gains. When results flatten, adjust prompts or task scope before scaling. Avoid vanity metrics like total lines of code written; they often hide inefficiency rather than prove progress.
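To make one of the metrics above concrete, the sketch below compares the median issue-resolution time before and during a pilot. The timestamps are made-up sample data and the function names are illustrative; in practice the pairs would come from an issue tracker export.

```python
from datetime import datetime
from statistics import median


def resolution_hours(issues: list[tuple[str, str]]) -> list[float]:
    """Convert (opened, closed) ISO timestamps into hours to resolution."""
    hours = []
    for opened, closed in issues:
        delta = datetime.fromisoformat(closed) - datetime.fromisoformat(opened)
        hours.append(delta.total_seconds() / 3600)
    return hours


def median_change(before: list[tuple[str, str]], after: list[tuple[str, str]]) -> float:
    """Relative change in median resolution time (negative means faster)."""
    base = median(resolution_hours(before))
    pilot = median(resolution_hours(after))
    return (pilot - base) / base


# Made-up sample data, purely to show the calculation.
before_pilot = [
    ("2025-03-03T09:00", "2025-03-04T09:00"),  # 24 h
    ("2025-03-05T10:00", "2025-03-05T22:00"),  # 12 h
    ("2025-03-06T08:00", "2025-03-08T08:00"),  # 48 h
]
during_pilot = [
    ("2025-04-01T09:00", "2025-04-01T21:00"),  # 12 h
    ("2025-04-02T09:00", "2025-04-02T15:00"),  # 6 h
    ("2025-04-03T09:00", "2025-04-04T09:00"),  # 24 h
]

change = median_change(before_pilot, during_pilot)
print(f"median resolution time changed by {change:+.0%}")  # prints -50% for this sample
```

The median is used here rather than the mean so that a single long-running issue does not dominate the comparison. With only a handful of issues, any such figure is an indication, not proof.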

4. Build a learning culture, not a dependency

Treat agentic development as an evolving skill; share lessons, test models and analyse failures to avoid overreliance and refine which workflows actually benefit. Teams that iterate transparently on successes and errors develop healthier AI literacy and stronger engineering resilience.

What’s the Next Phase of AI Coding Agents?

The early fascination with multi-agent systems, in which AI “colleagues” act as architect, tester or reviewer, has faded. These distributed setups caused cascading errors and were costly to debug. By mid-2025, usage shifted to smaller specialised agents that are easier to monitor, more predictable and cheaper to run. The reason is that small agents fail gracefully, while large agent networks tend to compound mistakes across layers of interaction.

The next step is hybrid deterministic and LLM agents, which combine rule-based control for precision with LLM flexibility for creativity. Flatlogic’s hybrid generator, for example, merges intent extraction with AI code generation to deliver production-ready outputs. Future frameworks could integrate these hybrids directly with CI pipelines to balance speed with accountability, supported by new interoperability standards like the Model Context Protocol (MCP).
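The hybrid idea described above can be sketched in a few lines: a model proposes file edits, and a fixed set of rules decides whether they may proceed. This is an illustration under stated assumptions, not how Flatlogic or any specific framework works. The allowed paths, the file limit and the `fake_model` stand-in are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

# Assumptions for illustration: which paths an agent may touch and how many files per change.
ALLOWED_PREFIXES = ("tests/", "docs/")
MAX_FILES = 5


@dataclass
class Decision:
    approved: bool
    reasons: list[str]


def check_policy(changes: dict[str, str]) -> Decision:
    """Deterministic gate: the same input always yields the same verdict."""
    reasons = []
    if len(changes) > MAX_FILES:
        reasons.append(f"touches {len(changes)} files, limit is {MAX_FILES}")
    for path in changes:
        if not path.startswith(ALLOWED_PREFIXES):
            reasons.append(f"path outside allowed areas: {path}")
    return Decision(approved=not reasons, reasons=reasons)


def run_hybrid_agent(task: str, propose: Callable[[str], dict[str, str]]) -> Decision:
    """Let the model propose file edits, then let fixed rules decide."""
    changes = propose(task)       # flexible, non-deterministic step
    return check_policy(changes)  # rule-based, auditable step


def fake_model(task: str) -> dict[str, str]:
    """Stand-in for a real LLM call; returns path -> new file content."""
    return {"tests/test_api.py": "def test_ok():\n    assert True\n"}
```

Calling `run_hybrid_agent("add a test", fake_model)` returns an approved decision, while a proposal touching `src/` would be rejected with a reason attached. Keeping the gate free of model calls is the design point: its verdicts can be logged, replayed and audited.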

Our own work on AI visibility and GEO with Rankfor.ai shows how this principle translates into real-world systems aligned with measurable business outcomes.

Human oversight continues to define responsible agentic AI development. Developers curate and validate AI contributions while QA and DevOps teams pivot toward governance and model management. Delivery cycles continue to shorten while experimentation grows.

What It Means for the Future of Software Engineering

Teams that traditionally split roles between coder, tester and reviewer now share those roles with AI agents that learn from feedback and work around the clock.

Over time, every stage of development will likely include agentic participation, in everything from planning and implementation to AI testing automation and quality assurance. The craft of engineering will evolve from writing instructions for machines to designing systems that co-create. Experimentation will become a defining measure of maturity: organisations that encourage it responsibly will out-learn competitors tied to rigid processes.

Teams that keep humans in control, validating outputs and protecting data, will lead this era. Developers who experiment now, with discipline and curiosity, will define the workflows and ethics that turn AI coding agents from headline hype to standardised practice.

The future belongs to those who automate wisely. At DO OK, we turn emerging tools into reliable practice through our AI & machine learning services. Get in touch and find out how we can help design your next project around real-world AI efficiency and productivity, not hype.