How to Choose Modular Monolith vs. Microservices Architecture

You (probably) don’t need microservices. You need better boundaries.

A senior engineer at DO OK recently made a thought-provoking observation that challenges the prevailing wisdom in our industry: “For many projects, microservices may not be the most effective choice.”

The real value, he explained, isn’t in how you deploy your services; it’s in how you split your application logically. Physical deployment is just a detail. “If you do a physical split without a proper logical split,” he added, “it ends really badly.”

Development teams genuinely need better boundaries, but the mistake is in the enforcement mechanism. Physical deployment (separate repositories, separate services, network calls between them) is the tool they know. Logical boundaries enforced within a single codebase either aren’t familiar or don’t feel “real” enough to stick. So teams reach for the deployment sledgehammer when the problem actually calls for a different kind of discipline.

This might be the biggest reason why so many companies struggle with the modular monolith vs. microservices decision: they’re asking the wrong question. The debate shouldn’t be about deployment strategy. It should be about establishing clear boundaries first. Many organisations jump straight to “Should we use microservices?” without fully assessing modular monolith architecture, which can solve the same fundamental problems with significantly less complexity.

Understanding Modular Monolith Architecture

Before comparing architectures, let’s define what modular monolith architecture actually consists of. It’s not a traditional monolith with better code organization. It’s software organized into well-defined, independent modules, each aligned with a clear bounded context. A bounded context is a concept from domain-driven design that defines where one business capability ends and another begins.

These modules deploy as a single unit but are structured internally like microservices. Each module owns its data, exposes clear interfaces, and could be extracted into an independent service with minimal rework. You can see it as microservices discipline without the complexity.

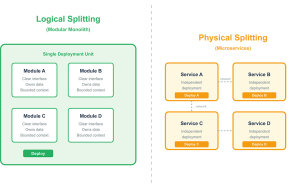
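
As a minimal sketch of what “owns its data, exposes clear interfaces” can look like in code, the example below uses two hypothetical modules, `OrdersModule` and `BillingModule`. The names, fields, and in-memory storage are assumptions made for illustration, not part of any particular framework. The point is only that billing never touches order storage directly; it goes through the public methods.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderSummary:
    """Public data shape that other modules are allowed to see."""
    order_id: int
    customer_id: int
    total_cents: int


class OrdersModule:
    """Public interface of a hypothetical 'orders' module."""

    def __init__(self) -> None:
        # Private storage: stands in for tables that only this module may touch.
        self._orders: dict[int, dict] = {}
        self._next_id = 1

    def place_order(self, customer_id: int, total_cents: int) -> OrderSummary:
        order_id = self._next_id
        self._next_id += 1
        self._orders[order_id] = {
            "customer_id": customer_id,
            "total_cents": total_cents,
        }
        return OrderSummary(order_id, customer_id, total_cents)

    def orders_for(self, customer_id: int) -> list[OrderSummary]:
        return [
            OrderSummary(oid, row["customer_id"], row["total_cents"])
            for oid, row in self._orders.items()
            if row["customer_id"] == customer_id
        ]


class BillingModule:
    """A second hypothetical module that depends on orders only via its interface."""

    def __init__(self, orders: OrdersModule) -> None:
        self._orders = orders

    def outstanding_cents(self, customer_id: int) -> int:
        # Uses the public query method, never the private _orders storage.
        return sum(o.total_cents for o in self._orders.orders_for(customer_id))
```

Both classes live in one process and deploy together, yet the dependency between them is as explicit as an API call between two services would be.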

The critical distinction that some architecture discussions miss is the difference between logical and physical splitting. Logical splitting means defining clear module boundaries, explicit interfaces, and strict data ownership rules. Physical splitting means deploying those modules as separate services with independent deployment pipelines.

You can have logical splitting without physical splitting; that’s a modular monolith. You cannot succeed with physical splitting without logical splitting; that becomes a distributed monolith, a far more costly outcome.

The distinction matters because roughly 80% of microservices benefits come from logical boundaries, not independent deployment. Modular monoliths deliver clear ownership, independent development, and extractable components, without distributed systems complexity, network latency, or operational overhead.

Logical vs Physical Splitting

Logical boundaries give you everything that matters for software architecture quality: service boundaries based on business capabilities, independent team development without constant coordination, and a clear path to microservices extraction when scaling needs genuinely emerge. The hard work (defining boundaries) is already done, so extraction doesn’t require a full rewrite.
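
One way to picture why extraction doesn’t require a rewrite is sketched below. The contract `InventoryApi`, both implementations, and the `send` transport are hypothetical names invented for this example; `send` is a stand-in for whatever HTTP or messaging client a real system would use. The caller, `checkout`, is the same in both deployments.

```python
import json
from typing import Callable, Protocol


class InventoryApi(Protocol):
    """Contract that callers depend on, wherever the module runs."""

    def reserve(self, sku: str, quantity: int) -> bool: ...


class InProcessInventory:
    """Modular-monolith form: a plain in-memory call."""

    def __init__(self, stock: dict[str, int]) -> None:
        self._stock = dict(stock)

    def reserve(self, sku: str, quantity: int) -> bool:
        if self._stock.get(sku, 0) < quantity:
            return False
        self._stock[sku] -= quantity
        return True


class RemoteInventory:
    """Extracted form: same contract, but each request crosses a transport."""

    def __init__(self, send: Callable[[str], str]) -> None:
        self._send = send  # hypothetical transport: request text in, reply text out

    def reserve(self, sku: str, quantity: int) -> bool:
        reply = self._send(json.dumps({"sku": sku, "quantity": quantity}))
        return bool(json.loads(reply)["reserved"])


def checkout(inventory: InventoryApi, sku: str, quantity: int) -> str:
    """Caller code: identical for both deployments."""
    return "confirmed" if inventory.reserve(sku, quantity) else "out of stock"


def fake_inventory_service(stock: dict[str, int]) -> Callable[[str], str]:
    """Simulates the service side of the wire so the sketch runs locally."""
    backend = InProcessInventory(stock)

    def handle(request: str) -> str:
        body = json.loads(request)
        reserved = backend.reserve(body["sku"], body["quantity"])
        return json.dumps({"reserved": reserved})

    return handle
```

A real extraction would also have to handle timeouts, retries, and partial failure, which is exactly the cost the next paragraphs describe. What the sketch shows is that the boundary itself does not change.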

Physical deployment adds specific capabilities at significant cost. The main benefits are:

- Independent scaling of individual modules

- Deployment independence without coordinating releases

- Technology diversity across services

But the costs add up, via:

- Distributed systems complexity

- Network reliability concerns

- Data consistency challenges

- 2-3x infrastructure expenses

- 3-6 month productivity dip during transition

So do you actually need those benefits yet? For the majority of software projects, the honest answer is probably no.

The alternative (and the expensive mistake we see repeatedly) is the distributed monolith. Services share databases and call each other synchronously in long chains, developing circular dependencies because boundaries don’t align with business capabilities. You end up with all the complexity of distributed systems and none of the benefits. You’re debugging across network boundaries and managing deployment coordination despite “independent” services, while paying 2-3x infrastructure costs for worse performance than your original monolith.
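
Circular dependencies are one symptom that can be checked mechanically. The sketch below assumes you can write down which service calls which as a simple mapping; the service names in the usage note are invented, and `find_cycle` is an ordinary depth-first search rather than any specific tool’s algorithm.

```python
from typing import Optional


def find_cycle(calls: dict[str, list[str]]) -> Optional[list[str]]:
    """Return one dependency cycle as a list of names, or None if there is none."""
    VISITING, DONE = 1, 2
    state: dict[str, int] = {}
    path: list[str] = []

    def visit(service: str) -> Optional[list[str]]:
        state[service] = VISITING
        path.append(service)
        for callee in calls.get(service, []):
            if state.get(callee) == VISITING:
                # Back edge found: slice out the loop and close it.
                return path[path.index(callee):] + [callee]
            if callee not in state:
                cycle = visit(callee)
                if cycle:
                    return cycle
        path.pop()
        state[service] = DONE
        return None

    for service in calls:
        if service not in state:
            cycle = visit(service)
            if cycle:
                return cycle
    return None
```

For example, `find_cycle({"orders": ["billing"], "billing": ["customers"], "customers": ["orders"]})` reports the loop through all three services, while the same graph without the last edge returns `None`.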

The evidence is compelling. Google’s research identifies five principal microservices challenges:

- Performance overhead

- Correctness difficulties

- Management complexity

- Reduced development speed

- Operational cost

Segment publicly moved from microservices back to a monolith, citing reduced complexity. Amazon Prime Video consolidated the distributed components of its audio/video monitoring service into a monolith, reducing that service’s costs by 90% while improving performance.

These aren’t failures of microservices architecture itself. They’re evidence that physical deployment is an optimization you apply after establishing logical boundaries, not a shortcut to good architecture.

Practical Decision Framework for Each Architecture

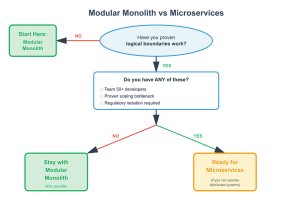

So when should you actually choose modular monolith architecture versus microservices? Here’s an honest framework to apply based on observable conditions:

Start with Modular Monolith Architecture When…

Your team numbers fewer than 30-40 developers. Below this threshold, the overhead of sharing a single deployment pipeline is lower than the cost of distributed systems complexity. Teams work on independent modules with clear boundaries but deploy together, which is faster and simpler than orchestrating dozens of services.

You’re still learning the domain. Boundaries evolve early in a project. Refactoring modules within a monolith is straightforward, but rearchitecting microservices requires data migration and API versioning coordination. Start modular, extract later when boundaries stabilise.

DevOps maturity is limited. Microservices require robust CI/CD, containerisation platforms, distributed tracing, centralised logging, and service meshes. If you lack these capabilities, or building them consumes more resources than your application, you’re not ready for the operational burden.

Cost sensitivity matters in your current stage. Microservices infrastructure typically costs 2-3x more than a modular monolith. With a modular monolith, you don’t need API gateways, service discovery, or distributed tracing, and you can run on a single server cluster rather than managing dozens of containerised services.

Move to Microservices Architecture When…

You’ve already established logical boundaries in your modular monolith. If you can’t maintain boundaries within a single codebase, network boundaries will make things worse. Well-defined modules become microservices candidates, and clear interfaces become API contracts. The extraction is straightforward because the hard work is done.

Specific modules have genuinely different scaling characteristics that your modular monolith can’t address. Test the second half of that condition before acting on it: a well-built modular monolith can support deploying specific modules independently through various platform-specific configurations, like HTTP routing at the proxy layer or event-driven selective loading. Per-module scaling alone isn’t sufficient justification for microservices.

Your team has grown beyond 50 developers across multiple autonomous teams. At this scale, monolith deployment coordination becomes a bottleneck. Teams wait for others to finish. Release schedules require extensive coordination. The productivity lost to coordination exceeds the cost of distributed systems complexity.

Regulatory requirements demand service isolation. Sometimes the decision is compliance-driven. PCI compliance may require payment processing separation. GDPR might demand data residency controls. Industry regulations may mandate security boundaries. Physical service separation then becomes necessary regardless of team size or scaling needs.

Never Choose Microservices When…

If “it’s what Netflix does” is your primary rationale, STOP. Netflix’s scale almost certainly doesn’t match yours. If you haven’t attempted a modular monolith first, you’re skipping the step that makes microservices viable. If technical debt is your problem, microservices will amplify it, not solve it.
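
The conditions in this framework can be condensed into a small function. This is a rough sketch, not a scoring model: the field names are invented, the 50-developer threshold is taken from the text above, and the order in which the checks run is one possible reading of the framework (boundaries first, then compliance, then operational readiness).

```python
from dataclasses import dataclass


@dataclass
class Situation:
    developers: int
    boundaries_proven: bool          # logical boundaries hold inside one codebase
    mature_devops: bool              # CI/CD, tracing, centralised logging in place
    scaling_unmet_by_monolith: bool  # scaling needs the monolith genuinely cannot meet
    regulatory_isolation: bool       # compliance demands separate services


def recommend(s: Situation) -> str:
    """Map observable conditions to a recommendation (illustrative ordering)."""
    if not s.boundaries_proven:
        return "modular monolith: establish logical boundaries first"
    if s.regulatory_isolation:
        return "extract the regulated modules"
    if not s.mature_devops:
        return "modular monolith: build operational capability first"
    if s.developers > 50 or s.scaling_unmet_by_monolith:
        return "consider extracting specific modules"
    return "modular monolith"
```

Note that “it’s what Netflix does” is not an input. A 20-developer team with proven boundaries and no scaling or compliance pressure gets `"modular monolith"` back, whatever its ambitions.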

Avoiding Expensive Architecture Mistakes

Understanding when to use each architecture matters less if you fall into common traps that sabotage either approach. Here are the biggest mistakes that consistently derail architecture decisions:

Physical splitting without logical boundaries. When you deploy microservices that share databases, have circular dependencies, or require coordinated releases, you’ve created a distributed monolith: maximum complexity with minimum benefit. The fix isn’t better deployment tools. It’s establishing bounded contexts through domain-driven design before deploying.

Treating deployment as architecture. Microservices don’t automatically produce good architecture any more than buying expensive tools makes you a craftsman. Architecture quality comes from domain understanding, clear boundaries, and disciplined interfaces, regardless of how you deploy. A well-architected modular monolith beats a poorly-architected microservices system every time.

Migrating to escape technical debt. Technical debt doesn’t disappear when you adopt microservices. It follows you into the new architecture with added debugging complexity across network boundaries. If your monolith’s boundaries are unclear, fix that first. Establish clear modules and pay down debt, then consider physical deployment.

Underestimating operational complexity. Distributed systems require fundamentally different operational capabilities: distributed tracing, service discovery, sophisticated network monitoring, data consistency strategies, and teams experienced in debugging distributed failures. Building this capability takes significant investment. Don’t deploy microservices until you can operate them effectively.

Premature optimization. Choosing microservices for hypothetical future scale means paying real costs for benefits you might never need. Below approximately 50 developers, the overhead of running distributed services typically exceeds the cost of coordinating work in a single monolith. Start with a modular monolith and optimize when you have evidence: actual scaling bottlenecks, measured performance problems, demonstrated coordination issues. Scale architecture as your team grows, not as you hope it might.

Start with Logical Boundaries, Not Deployment Strategy

The decision gets easier when you focus on what actually matters. First, use domain-driven design to establish bounded contexts around your business capabilities and their natural boundaries. Second, build modular monolith architecture with well-defined service boundaries: clear interfaces, data ownership, minimal coupling. Third, validate that modules are truly independent, and guard that independence with automated architecture tests, not just code review or team conventions.

This matters more than it might seem: unfamiliarity with these automated approaches is one of the more common reasons teams end up splitting into separate repositories to enforce discipline, which then leads almost inevitably to microservices. The physical split becomes a workaround for a tooling gap. If your pipeline catches boundary violations automatically, you don’t need separate deployments to make the boundaries real.
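
To make “automated architecture tests” concrete, here is a minimal sketch built on Python’s standard `ast` module. The rule it enforces is an assumption chosen for the example: code in one top-level package may import another top-level package only through that package’s `api` submodule. The function name and the `api` convention are hypothetical; dedicated tools exist for this in most ecosystems and do considerably more.

```python
import ast


def boundary_violations(sources: dict[str, str], public_suffix: str = "api") -> list[str]:
    """Find cross-package imports that bypass a package's public submodule.

    `sources` maps a dotted module path (e.g. 'billing.service') to its source text.
    """
    packages = {name.split(".")[0] for name in sources}
    violations = []
    for name, text in sorted(sources.items()):
        owner = name.split(".")[0]
        for node in ast.walk(ast.parse(text)):
            if isinstance(node, ast.Import):
                targets = [alias.name for alias in node.names]
            elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
                # Treat 'from pkg import mod' like 'pkg.mod'.
                targets = [f"{node.module}.{alias.name}" for alias in node.names]
            else:
                continue  # not an import, or a relative import within the package
            for target in targets:
                parts = target.split(".")
                crosses_boundary = parts[0] in packages and parts[0] != owner
                if crosses_boundary and parts[:2] != [parts[0], public_suffix]:
                    violations.append(f"{name} imports {target}")
    return violations
```

In a real pipeline, a test would read the source files from disk, call a check like this, and fail the build when the returned list is not empty. A bare `import orders` from another package is also flagged under this rule, which may or may not suit your conventions.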

Only when logical boundaries are proven in practice should you consider physical deployment. Your signals should be different scaling needs, team size beyond coordination capacity, or regulatory isolation requirements. If none of those apply, you’ve already captured most of the value.

Most companies don’t need microservices architecture. Those that eventually do usually aren’t ready when they think they are. Success comes from architecture discipline, not deployment topology.

Optimise for Clarity Before Complexity

Logical splitting delivers roughly 80% of the benefits with 20% of the complexity. For the vast majority of software projects, that’s the right trade-off. When you’re working on complex systems, like IoT platforms processing millions of events, legacy modernization with decades of technical debt, or applications in highly regulated industries, the decisions get trickier. In these cases, partnering with teams like ours at DO OK who have explored both approaches in production can help you avoid expensive mistakes and wasted months of effort.

The most expensive architecture mistakes tend to happen early, when the pressure to ship overrides the discipline to define boundaries properly. If you’d rather avoid that conversation eighteen months from now, let’s have a different one today.

Reach out to speak with our team if you’re weighing up an architecture decision, or just want a second opinion from a team that’s seen both approaches in production.