Regulatory pressure on Europe’s energy and IoT sectors is growing fast. The Energy Efficiency Directive (EED), Energy Performance of Buildings Directive (EPBD) and Corporate Sustainability Reporting Directive (CSRD) all demand structured, traceable, high-quality energy data. Systems engineers know that this is exactly the same foundation effective AI needs.

Still, many companies rush to deploy AI without first verifying that their data pipelines can meet either compliance or operational standards. This leads to AI pilots that stall, reporting that regulators can’t verify and technology investments that underdeliver.

The models aren’t the issue: the inputs are. Without granular, timestamped, context-rich data, even the smartest algorithms misfire, and your compliance reporting rests on uncertain footing.

AI-ready energy data pipelines are the common denominator for meeting new EU requirements and achieving real-world ROI. So what makes metering “reliable,” and how do you structure a pipeline that serves both regulators and algorithms? Let’s unpack the issue.

This article was originally published on Medium.com

Energy companies have no shortage of AI ambitions: predictive maintenance, load control, grid optimisation and more, a veritable rainbow of cost-saving innovations. But most projects fail to scale, and poor-quality data is almost always the culprit.

High-impact AI relies on clean, high-frequency, context-rich inputs. Real-world feeds, however, tend to be sparse, noisy or siloed, coming from IoT systems that were never designed for machine learning. The result is false anomaly alerts, overactive controls, dashboards no one trusts and teams losing confidence in their tools.

Now, forward-thinking teams are starting to ask a different question:

“Does our data deserve AI?”

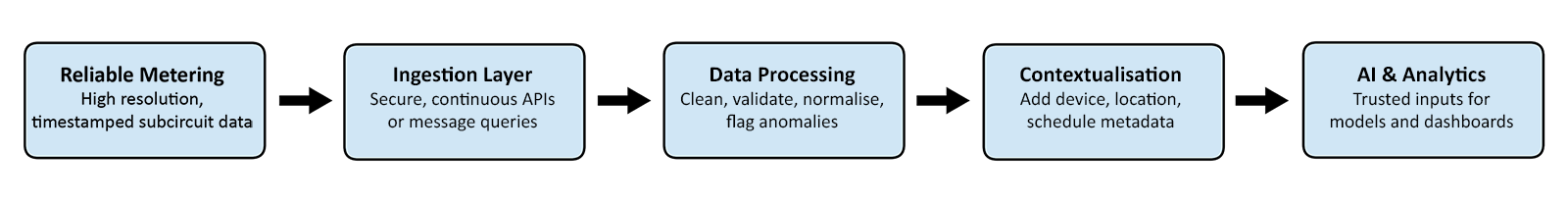

An AI-ready pipeline begins with reliable metering (submetered, timestamped and context-aware) and continues through ingestion, processing and validation to deliver clean, continuous, machine-readable data.

Smart meters provide valuable insights, but for AI they need to go deeper. Most record building-level usage in 15-minute intervals: fine for billing or usage audits, but not for predictive models that need circuit-level, high-frequency, context-rich data.
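To make the granularity point concrete, here is a minimal Python sketch using synthetic, hypothetical numbers (not measured data). It assumes a chiller circuit sampled once per second that draws a steady 40 kW with a one-minute start-up surge to 120 kW. A single 15-minute reading only reveals the average, so the surge disappears.

```python
from statistics import mean

# Hypothetical example: one 15-minute interval of 1 s power readings (kW).
SAMPLES_PER_INTERVAL = 15 * 60  # 900 one-second samples


def synthetic_interval() -> list[float]:
    """Steady 40 kW load with a 60-second surge to 120 kW (illustrative only)."""
    readings = [40.0] * SAMPLES_PER_INTERVAL
    for i in range(300, 360):  # surge starts five minutes into the interval
        readings[i] = 120.0
    return readings


def summarise(readings: list[float]) -> dict[str, float]:
    """Compare what a 15-minute meter implies with what 1 s data reveals."""
    return {
        "mean_kw": mean(readings),           # average power implied by one 15-minute reading
        "peak_kw": max(readings),            # only visible at high frequency
        "energy_kwh": sum(readings) / 3600,  # sum of kW over 1 s steps = kW·s; /3600 -> kWh
    }


if __name__ == "__main__":
    print(summarise(synthetic_interval()))
```

Both views agree on energy (about 11.3 kWh), but only the 1 s data shows the 120 kW peak that a predictive model would need to learn start-up behaviour.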

AI works best when it knows not just how much energy was used, but what used it, when and why. Many IoT systems fall short: sensors without timestamps, siloed data and unstructured formats produce more noise than useful insight.

The main requirements for AI-ready metering are:

- Granularity: sub-circuit level (e.g. HVAC, elevators, chillers)

- Frequency: real-time or high-frequency (1s or better)

- Metadata: device, location, schedule and operational context

- Format: structured, consistent and ready for automated processing
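One way to enforce these requirements at the point of capture is a typed record that refuses readings missing a timezone or key metadata. The sketch below is a minimal Python illustration; the field names are assumptions chosen for this example, not a standard schema.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class MeterReading:
    """One AI-ready sample: a value plus the context a model needs.

    Field names are hypothetical, chosen for illustration only.
    """
    timestamp: datetime  # must be timezone-aware
    device_id: str       # e.g. a sub-circuit meter identifier
    circuit: str         # e.g. "HVAC", "elevators", "chillers"
    location: str        # building / floor / zone
    schedule: str        # operating context, e.g. "occupied"
    quantity: str        # e.g. "active_power"
    unit: str            # e.g. "kW"
    value: float

    def __post_init__(self) -> None:
        # Naive timestamps cannot be aligned reliably across sites and systems.
        if self.timestamp.tzinfo is None or self.timestamp.utcoffset() is None:
            raise ValueError("timestamp must be timezone-aware")
        # Reject readings that arrive without the metadata needed to interpret them.
        for name in ("device_id", "circuit", "location", "unit"):
            if not getattr(self, name):
                raise ValueError(f"missing metadata field: {name}")

    def to_record(self) -> dict:
        """Flatten to a machine-readable dict with an ISO 8601 UTC timestamp."""
        record = asdict(self)
        record["timestamp"] = self.timestamp.astimezone(timezone.utc).isoformat()
        return record
```

Validating at construction time means malformed data fails loudly at the edge instead of surfacing later as a puzzling model error.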

| Criteria | Basic Smart Metering | Reliable AI-driven Metering |
| --- | --- | --- |
| Granularity | Building-level | Sub-circuit level |
| Sampling frequency | Every 15 minutes (typical) | Real-time or high-frequency (e.g. 1 s) |
| Level of detail | Total consumption only | System-specific and timestamped |
| Data format | Aggregated reports | Structured time series |
| Context/metadata | Minimal or none | Tagged with device, location and schedule |
| Readiness for AI | Limited | High |

A good energy AI system starts with a solid data backbone. Instead of sensors blindly dumping numbers into the cloud, you need a layered setup built for reliability, traceability and machine readability:

1. Reliable metering: high-resolution, timestamped sub-circuit data captured at the edge via smart sensors and meters.

2. Ingestion layer: data streamed continuously and losslessly via message queues, APIs, or middleware.

3. Processing: cleaning, normalising, validating, and flagging anomalies while enforcing consistent structure.

4. Contextualisation: adding equipment type, location, schedule, and other metadata so AI can interpret a full range of inputs.

5. AI & analytics: clean, contextualised data feeding models, automation, and dashboards for actionable insights.
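The layered design above can be sketched as a chain of independent stages. The Python below is a minimal illustration, assuming records are plain dicts and that a hypothetical asset registry supplies equipment metadata; a real ingestion stage would read from a message queue or API, and the final AI & analytics stage is left out. Each stage is a generator that takes a stream and returns a stream, so any one of them can be replaced without touching the rest.

```python
import math
from typing import Callable, Iterable, Iterator

Record = dict
Stage = Callable[[Iterable[Record]], Iterator[Record]]


def ingest(raw: Iterable[Record]) -> Iterator[Record]:
    """Stand-in for a message-queue or API consumer: passes records through."""
    yield from raw


def process(records: Iterable[Record]) -> Iterator[Record]:
    """Validate values and normalise W to kW, flagging bad readings
    instead of silently dropping them."""
    for r in records:
        r = dict(r)  # copy so upstream records are never mutated
        value = r.get("value")
        if value is None or not math.isfinite(value):
            r["flag"] = "invalid_value"
        else:
            r["flag"] = None
            if r.get("unit") == "W":
                r["value"], r["unit"] = value / 1000, "kW"
        yield r


def make_contextualiser(registry: dict[str, dict]) -> Stage:
    """Build a stage that attaches metadata from a hypothetical asset registry."""
    def contextualise(records: Iterable[Record]) -> Iterator[Record]:
        for r in records:
            yield {**r, **registry.get(r.get("device_id"), {"equipment": "unknown"})}
    return contextualise


def run_pipeline(raw: Iterable[Record], stages: list[Stage]) -> list[Record]:
    """Chain stages lazily; swapping one stage leaves the others untouched."""
    stream: Iterable[Record] = raw
    for stage in stages:
        stream = stage(stream)
    return list(stream)


if __name__ == "__main__":
    registry = {"M-101": {"equipment": "chiller", "location": "roof", "schedule": "24/7"}}
    raw = [{"device_id": "M-101", "value": 52000.0, "unit": "W"}]
    print(run_pipeline(raw, [ingest, process, make_contextualiser(registry)]))
```

Flagging rather than deleting suspect values keeps the record trail intact, which matters as much for auditors as for models.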

When each stage feeds smoothly into the next, you get trustworthy outputs and the flexibility to scale or swap out components without rebuilding the whole system.

The Nowy Rynek E office building in Poznań, Poland, developed by Skanska, is a great example of how AI-ready data pipelines and IoT-driven building systems can deliver both operational savings and compliance with Europe’s rising sustainability standards.

Commercial offices are among the most resource-intensive parts of the built environment. Skanska recognised that traditional building management systems lack the granularity to optimise efficiency or provide audit-ready data. To tackle this challenge, they partnered with Exergio to develop a complete solution for their Nowy Rynek E project, integrating high-frequency IoT metering with Exergio’s AI platform.

Predictive algorithms continuously adjust HVAC and lighting to match occupancy and weather forecasts, while smart LEDs, chilled beams, and advanced filtration ensure comfort and air quality. Water systems reuse rainwater and greywater, cutting potable use by 64%. The building is fully powered by renewables, supported by on-site photovoltaics.

The results speak for themselves:

- 20% reduction in energy waste in under a year

- €80,000 saved within the first nine months

- 30% lower overall energy use compared to standard offices

- LEED Core & Shell Platinum certification, with WELL and WELL Health-Safety recognition

Nowy Rynek E proves that structured, timestamped data pipelines can make AI effective while enabling compliance with Europe’s toughest sustainability frameworks, delivering measurable ROI and long-term value.

Energy and utility AI initiatives don’t usually fail because the technology is lacking, but because the data feeding it isn’t trusted or usable. AI fails fastest when it has the wrong inputs: poorly structured IoT data can trigger false alarms, misfire automation and waste maintenance resources.

For example, one miscalibrated CO₂ sensor can keep an HVAC system running at full tilt all day, driving energy use up instead of down. IBM research shows that generic AI models often miss subtle, entity-specific patterns, such as two identical chillers behaving differently because of their location or schedule. Without granular, contextualised metering, AI sees only noise and acts accordingly.
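A simple plausibility check can catch the miscalibrated-sensor case before it reaches the control loop. The sketch below is a heuristic, not a calibration procedure. It assumes outdoor CO₂ sits around 420 ppm, that the zone is empty and ventilated between 01:00 and 05:00, and that a 200 ppm margin is acceptable; all three are assumptions to tune per building. If a sensor never gets close to outdoor levels overnight, it likely reads high.

```python
from datetime import datetime

OUTDOOR_CO2_PPM = 420.0  # assumption: approximate outdoor background level
MARGIN_PPM = 200.0       # hypothetical tolerance; tune per building


def suspect_high_offset(readings: list[tuple[datetime, float]],
                        unoccupied_hours: range = range(1, 5)) -> bool:
    """Heuristic: flag a CO2 sensor whose lowest reading during unoccupied
    hours stays well above outdoor levels, suggesting a positive offset."""
    night = [ppm for ts, ppm in readings if ts.hour in unoccupied_hours]
    if not night:
        return False  # no unoccupied-hour data, so no evidence either way
    return min(night) > OUTDOOR_CO2_PPM + MARGIN_PPM


if __name__ == "__main__":
    day = [(datetime(2024, 3, 1, h), 900.0 if h < 6 else 1300.0) for h in range(24)]
    print(suspect_high_offset(day))
```

Routing a flagged sensor to maintenance, rather than letting it drive ventilation, is exactly the kind of context-aware rule the paragraph above calls for.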

For companies that get this right, the returns speak for themselves:

- Shell cut unplanned downtime by 35% with predictive maintenance

- BP saves around $10M annually with AI monitoring

- Capalo AI helps battery storage operators maximise earnings with predictive dispatch

Regulation is pushing in the same direction. The EED, EPBD and CSRD all demand structured, traceable, auditable energy data. Meeting these standards requires timestamped, context-rich pipelines that auditors and regulators can follow end to end.
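One possible way to make a pipeline followable end to end is a tamper-evident processing log, where each entry's hash covers the previous entry. This is a technique chosen for illustration, not something the directives or this article prescribe; the payload fields are hypothetical. The sketch uses only Python's standard `hashlib` and `json` modules.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(payload: dict, prev_hash: str) -> str:
    # Canonical JSON (sorted keys, fixed separators) so identical content
    # always produces the same hash.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append(log: list[dict], payload: dict) -> None:
    """Record one processing step, chained to the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"payload": payload, "prev": prev, "hash": entry_hash(payload, prev)})


def verify(log: list[dict]) -> bool:
    """Recompute the chain; any edited or reordered entry breaks it."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != entry_hash(entry["payload"], prev):
            return False
        prev = entry["hash"]
    return True
```

For example, appending an "ingest" step and a "validate" step and then quietly editing the first entry's record count makes `verify` return `False`, which gives an auditor a cheap integrity check over the whole processing history.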

Companies that build vendor-neutral, API-first infrastructure gain the flexibility to deploy future tools without costly rewrites or lock-in. Clean, high-quality data today means lower costs, better AI performance and fewer compliance risks tomorrow.

You don’t need a full system rebuild to become AI-ready, just a deliberate upgrade in data maturity. Following a few best practices will keep your AI ambitions grounded in reality, so that every model is fed with data it can actually use:

Map what’s collected, identify gaps and prioritise high-frequency sub-circuit data that can be tied to specific assets or zones.
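A first pass at mapping gaps can be as simple as measuring, per asset, what fraction of expected sampling slots actually contain data. The sketch below assumes you can export each asset's reading timestamps; the asset names are hypothetical. Ranking assets from worst to best coverage gives a rough priority list for metering upgrades.

```python
from datetime import datetime, timedelta


def coverage(timestamps: list[datetime], start: datetime, end: datetime,
             interval: timedelta) -> float:
    """Fraction of expected slots in [start, end) holding at least one reading."""
    expected = (end - start) // interval  # timedelta // timedelta -> int
    seen = {(ts - start) // interval for ts in timestamps if start <= ts < end}
    return len(seen) / expected if expected else 0.0


def rank_gaps(inventory: dict[str, list[datetime]], start: datetime,
              end: datetime, interval: timedelta) -> list[tuple[str, float]]:
    """Assets sorted from worst to best coverage."""
    scores = {asset: coverage(ts, start, end, interval) for asset, ts in inventory.items()}
    return sorted(scores.items(), key=lambda kv: kv[1])
```

Counting distinct slots rather than raw readings keeps duplicate messages from inflating an asset's score.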

Use consistent naming, metadata and time series formats. Keep your ingestion pipeline moving clean data reliably from edge sensors to storage without loss.
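Consistent naming is easiest to keep when it is checked by machine. The sketch below assumes a hypothetical dotted convention, `site.building.floor.system.device.measurement` in lowercase, and parses each point name into metadata fields, rejecting anything that does not conform. Substitute whatever convention your organisation actually uses.

```python
import re

# Hypothetical convention: site.building.floor.system.device.measurement,
# lowercase, with hyphens or underscores allowed inside a segment.
SEGMENT = r"[a-z0-9]+(?:[-_][a-z0-9]+)*"
FIELDS = ("site", "building", "floor", "system", "device", "measurement")
POINT_NAME = re.compile(r"\.".join(f"(?P<{f}>{SEGMENT})" for f in FIELDS))


def parse_point_name(name: str) -> dict[str, str]:
    """Split a point name into metadata fields, or raise if it breaks the convention."""
    match = POINT_NAME.fullmatch(name)
    if match is None:
        raise ValueError(f"non-conforming point name: {name!r}")
    return match.groupdict()


if __name__ == "__main__":
    print(parse_point_name("site1.bldg-a.f03.hvac.ahu-02.active_power"))
```

Because the name itself encodes location and system, a conforming point carries usable context even before it is joined with an asset registry.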

Test AI where clean data already exists, such as anomaly detection or tenant-level metering, and measure cost savings, downtime reduction and/or emissions impact.
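A useful pilot does not need a sophisticated model. As a baseline, the sketch below flags readings that deviate more than three standard deviations from the mean of the preceding window (a rolling z-score). This is a generic technique chosen for illustration, not the method used by any vendor named in this article; the window and threshold are assumptions to tune against your own data.

```python
from statistics import mean, stdev


def rolling_zscore_anomalies(values: list[float], window: int = 24,
                             threshold: float = 3.0) -> list[int]:
    """Indices whose value deviates more than `threshold` sample standard
    deviations from the mean of the preceding `window` values."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            continue  # flat history: z-score undefined, skip this point
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    hourly_kwh = [10.0, 11.0] * 24 + [30.0] + [10.0, 11.0] * 5  # synthetic series
    print(rolling_zscore_anomalies(hourly_kwh))
```

Comparing the alerts from a baseline like this against confirmed faults gives a concrete measure of whether a more complex model is worth the investment.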

Implement access controls, versioning and vendor-neutral architectures so you can adapt tools without costly rewrites or compliance headaches.

Energy companies don’t need more AI hype; they need better data foundations. What drives ROI is the discipline of clean metering, high-frequency pipelines and contextualised data, not complex models.

The good news is that many organisations already have the beginnings of an infrastructure fit for AI in place. But without deliberate planning, scale and traceability, that data won’t go far.

Before investing in new tools, start with the question that really matters: Does your data deserve AI?

If the answer is yes, or even almost, you're closer to measurable results than you think.

Let’s explore how your existing infrastructure can be strengthened for AI-ready performance: get in touch with our team to discuss your data pipeline maturity and next steps.
